Presentation (2018) on the Aether Committee, with a slide displaying the initial set of Aether’s working groups.

Aether Committee

|

T |

he

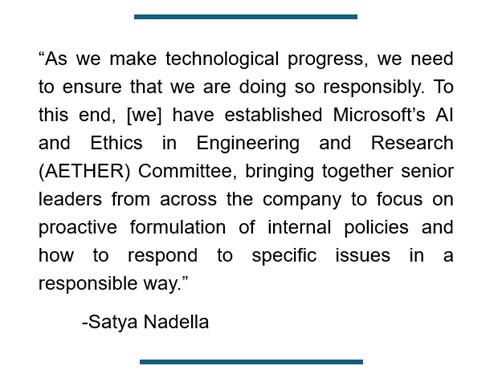

Aether committee was created to bring together top talent in AI principles and

technology, ethics, law, and policy from across Microsoft to formulate

recommendations on policies, processes, and best practices for the responsible

development and fielding of AI technologies. Aether works closely with

Microsoft’s Office of Responsible AI, a team that the Aether Committee had

helped to form in 2019.

Aether was co-founded in 2016 by Technical Fellow and Microsoft Research Director Eric Horvitz and company President Brad Smith, and has been chaired by Eric Horvitz. The name is an acronym for “AI and ethics in engineering and research.” Aether and its expert working groups have played an influential role in formulating Microsoft’s approach to the responsible development and deployment of AI technologies. These efforts have included the development and refinement of Microsoft’s AI principles and of the company’s Sensitive Uses review program.

Early Aether Committee meeting (2018).

Aether’s efforts have included deliberations by its main committee and by its working groups, each co-chaired by experts in the respective area. The working groups continue to play a key role in investigating frontier issues, developing points of view, creating tools, defining best practices, and providing tailored implementation guidance in their respective areas of expertise. Learnings from the working groups and the main committee have played an influential role in Microsoft’s responsible AI programs and policies. Reviews and guidance from the Sensitive Uses committee have led the company to decline, or to place limits on, specific customer engagements where AI-related risks were high.

In 2019, the Aether Committee helped to spawn the Office of Responsible AI (ORA), now led by former Aether Chief Counsel Natasha Crampton. Several functions of the Aether Committee were shifted to, and further formalized within, ORA, including the Sensitive Uses review program that Aether had established earlier. The ORA team, working closely with Aether experts, authored Microsoft’s Responsible AI Standard. The Standard is structured around Microsoft’s AI principles and extends their top-level definitions into concrete actions and goals that Microsoft teams are required to follow when they develop and deploy AI applications. ORA oversees the Sensitive Uses program, the Responsible AI Standard, and the company’s policy efforts and engagements.

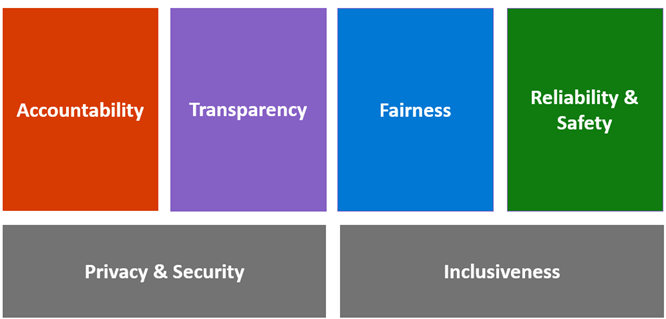

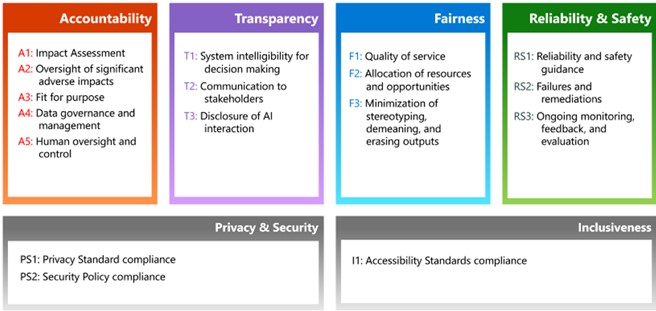

Microsoft’s six AI principles.

Microsoft’s Responsible AI Standard provides concrete details on the deliberations and actions required for deploying AI technologies, structured in accordance with Microsoft’s AI principles.

In

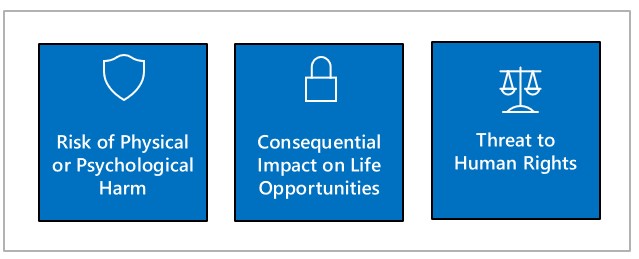

the first year of the Aether Committee (2017), the Aether Sensitive Uses

working group proposed a set of criteria to be used to determine if an AI

application is to be considered “sensitive” and should thus be routed before

deployment into a special process involving careful review, guidance, and

potential gating by a special Sensitive Uses review committee. The definition

of sensitive uses of AI has been remarkably stable over the years since the

considerations were first proposed.

Aether stood up a program and process referred to as the Sensitive Uses Review, now overseen and operated by the Office of Responsible AI. The Sensitive Uses review committee includes members with expertise spanning multiple areas. The committee considers the potentially costly behaviors, uses, and impacts of the AI applications under review. Discussion includes a deep dive into the case at hand, consideration of precedents set by discussions and decisions on earlier cases, and the development of guidance for the product team as well as for the company.

When Sensitive Uses was operated as part of the Aether Committee, challenging and novel cases considered by the Sensitive Uses working group were subsequently presented to the main Aether Committee for committee-wide discussion, comment, and refinement. Where standing questions and new considerations were identified, the Aether Committee engaged with Microsoft’s Senior Leadership Team for input, sharing case summaries and the results of discussions in the Sensitive Uses committee and the main Aether Committee, often including multiple options and their assessed tradeoffs.

Criteria for categorizing an AI application as sensitive and requiring special study and deliberation.

Proposed new AI products or services at Microsoft are considered sensitive uses of AI if their use or misuse has a significant probability of (1) causing significant physical or psychological injury to an individual; (2) having a consequential negative impact on an individual’s legal position or life opportunities, that is, the use or misuse of the AI system could affect an individual’s legal status (such as whether the individual is recognized as a minor, adult, parent, guardian, or person with a disability, as well as their marital, immigration, and citizenship status), could affect their legal rights (e.g., in the context of the criminal justice system), or could influence their ability to gain access to credit, education, employment, healthcare, housing, insurance, and social welfare benefits, services, or opportunities, or the terms on which these are provided; or (3) restricting, infringing upon, or undermining the ability to realize an individual’s human rights, including the following:

· Human dignity and equality in enjoyment of rights.
· Freedom from discrimination.
· Life, liberty, and security of the person.
· Equal protection of the law and criminal justice systems.
· Protection against arbitrary interference with privacy.
· Freedom of movement.
· Freedom of thought, conscience, and religion.
· Freedom of opinion and expression.
· Peaceful assembly and association.

These three criteria have provided a strong foundation over the years and form the basis for routing projects into a Sensitive Uses review, now overseen by the Office of Responsible AI.

Today, with the Office of Responsible AI taking on oversight and management of the Sensitive Uses review program, the Responsible AI Standard and the company’s adherence to its requirements, and external engagements on policy, Aether and its working groups have shifted their focus to an advisory role on frontier topics and rising challenges. The Aether Committee provides guidance to senior leadership, the Office of Responsible AI, and our responsible AI engineering teams on rising questions, challenges, and opportunities in the development and deployment of AI technologies. Aether’s experts and program managers collaborate closely with the Office of Responsible AI, and both teams coordinate with engineering and sales teams to help them uphold Microsoft’s AI principles in their day-to-day work and in projects involving AI products and services.

Aether has continued to organize special deep-dive offsites and studies that bring together teams of experts on specific challenges, opportunities, and directions in the responsible development and deployment of AI systems. These special studies include Aether strategic focus projects on emerging topics and issues in the responsible deployment of AI technologies. For example, the 2019 Aether Media Provenance (AMP) strategic focus project pursued technical methods for certifying the integrity and authenticity of media in a world of rising AI-generated abusive content. The project led to the development of core methods for media provenance and to the co-founding of Project Origin with the BBC, CBC, and The New York Times, and, later, to the Coalition for Content Provenance and Authenticity (C2PA) and today’s Content Credentials standard. C2PA now includes over 100 companies and supports a broad ecosystem of over 2,000 organizations, including camera manufacturers, content producers, technology companies, and non-profit organizations.

Contributors to the Aether Media Provenance (AMP) strategic focus team on a visit to BBC headquarters that resulted in the creation of Project Origin (2019), a collaboration among the BBC, CBC, Microsoft, and The New York Times. Left to right: Rico Malvar, Eric Horvitz, Paul England, Cedric Fournet.

Aether also continues to organize and lead cross-company studies, referred to as Laser studies. In a Laser study, cross-team groups of experts are assembled and work for weeks to months on deep-dive analyses and guidance on emerging, potentially disruptive AI technologies, including the rise of new fundamental capabilities or of new, potentially impactful and disruptive applications. The analyses and results of Laser studies are shared with Microsoft senior leadership. Laser studies consider both short-term needs and mitigations as well as recommendations for standing up longer-term R&D and processes.

For example, influential Laser studies and reports were commissioned on pre-release versions of GitHub Copilot and GPT-4, and they provided guidance to the respective product teams months before these models became generally available. Laser studies have examined capabilities, identified concerns about potentially costly behaviors, and recommended additional research, mitigations, and follow-on workstreams for continuing review and revision.