Eric Horvitz

Adaptive Systems & Interaction

Microsoft Research

Redmond, Washington 98052-6399

The Lumiere project at Microsoft Research was initiated in 1993 with the goal of developing methods and an architecture for reasoning about the goals and needs of software users as they work with software. At the heart of Lumiere are Bayesian models that capture the uncertain relationships between the goals and needs of a user and observations about program state, sequences of actions over time, and words in a user's query (when such a query has been made). Ancestors of Lumiere include our earlier research on probabilistic models of user goals to support the task of custom-tailoring information displayed to pilots of commercial aircraft, and related work on user modeling for the decision-theoretic control of displays that led to systems that modulate data displayed to flight engineers at the NASA Mission Control Center. These projects were undertaken while several of us were based at Stanford University.

The first Lumiere prototype was completed in late 1993 and became a demonstration system for communicating with program managers and developers in the Microsoft product groups. Here is a video demonstration of a Lumiere prototype for Excel that was used to communicate the potential for technologies like Lumiere within Microsoft. Later versions of Lumiere explored a variety of extensions including richer user profiling and autonomous actions.

Early in the Lumiere project, studies were performed in the Microsoft usability labs to investigate key issues in determining how best to assist users as they worked. The studies were aimed at exploring how experts in specific software applications worked to understand, from users' behaviors, the problems that users might be having with software. We also sought to identify the evidential distinctions that experts appeared to take advantage of in their reasoning about the best way to assist a user. Such information was partly obtained from protocol analysis of videotapes and transcripts of the thoughts verbalized by users, as well as by the experts who were trying to assist these users, in a number of Wizard of Oz studies.

A cognitive psychologist running a study at the Microsoft usability labs. Usability studies have played a significant role in the Lumiere project. More generally, over 25,000 hours of usability studies were invested in Office '97.

The Lumiere Wizard of Oz studies helped to elucidate important distinctions that were later woven into Bayesian networks. Later, usability studies were employed to test the actual performance of the Office Assistant, and user reactions to different versions of the interface.

The Lumiere prototypes have explored the combination of a Bayesian perspective on integrating information from user background, user actions, and program state, along with a Bayesian analysis of the words in a user's query. A Bayesian methodology for considering the likelihoods of alternative concepts given a query was developed at Microsoft Research, in collaboration with the Office product group in 1993. This Bayesian information-retrieval component of Lumiere was shipped in all of the Office '95 products as the Office Answer Wizard.

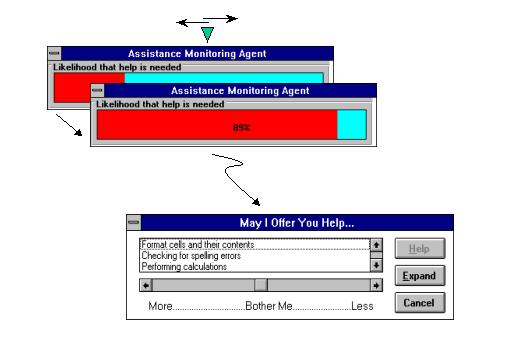

The earliest Lumiere prototypes combined an analysis of actions with an analysis of words, when words are available. Lumiere continuously monitors events with an event system that combines atomic actions into higher-level modeled events. The modeled events are variables in a Bayesian model. An event language was developed for building modeled event filters. As a user works, a probability distribution is generated over areas that the user may need assistance with. A probability that the user would not mind being bothered with assistance is also computed. The figure below shows a snapshot of Lumiere's reasoning about a user's needs.
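As a rough illustration of how atomic actions might be combined into modeled events, here is a minimal Python sketch. It is not Lumiere's event language: the `AtomicAction` type, the `count_within` filter, the event names, and all counts and time windows are hypothetical, chosen only to show the idea of filters that turn a raw action log into Boolean variables for a downstream model.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class AtomicAction:
    name: str    # hypothetical action label, e.g. "menu_open" or "undo"
    time: float  # seconds since the start of the session


# A modeled-event filter maps the action log to True/False.
EventFilter = Callable[[List[AtomicAction]], bool]


def count_within(name: str, min_count: int, window: float) -> EventFilter:
    """Fire when `name` occurs at least `min_count` times in the
    `window` seconds leading up to the most recent action."""
    def check(actions: List[AtomicAction]) -> bool:
        if not actions:
            return False
        now = actions[-1].time
        recent = [a for a in actions
                  if a.name == name and now - a.time <= window]
        return len(recent) >= min_count
    return check


# Hypothetical modeled events; the thresholds are illustrative assumptions.
FILTERS: Dict[str, EventFilter] = {
    "repeated_undo": count_within("undo", min_count=3, window=30.0),
    "menu_surfing": count_within("menu_open", min_count=4, window=20.0),
}


def modeled_events(actions: List[AtomicAction]) -> Dict[str, bool]:
    """Evaluate every filter against the current action log."""
    return {label: f(actions) for label, f in FILTERS.items()}


log = [AtomicAction("menu_open", t) for t in (1.0, 3.0, 6.0, 9.0)]
log.append(AtomicAction("undo", 12.0))
events = modeled_events(log)
print(events)
```

Each entry in the resulting dictionary plays the role of one observed variable that a probabilistic model of user needs could condition on.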

Folding in the consideration of words in a user's query. The probability distribution inferred about a user's needs is revised with the consideration of words in the user's query.

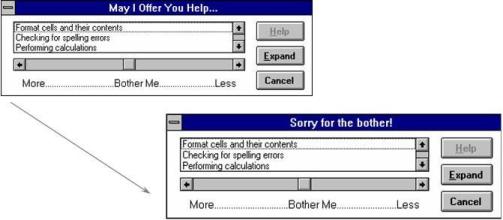
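The revision of a distribution over needs in light of query words can be sketched with Bayes' rule. The example below assumes, for simplicity, that observations are conditionally independent given the user's need (a naive Bayes model); Lumiere's actual Bayesian networks are not described at this level of detail here, and every need, observation name, and probability in the sketch is made up for illustration.

```python
from typing import Dict, Iterable

# Hypothetical prior over what the user needs help with.
PRIOR: Dict[str, float] = {"charting": 0.2, "formatting": 0.3, "none": 0.5}

# Hypothetical P(observation | need) for one modeled event and one query word.
LIKELIHOOD: Dict[str, Dict[str, float]] = {
    "charting":   {"menu_surfing": 0.6, "word:graph": 0.70},
    "formatting": {"menu_surfing": 0.5, "word:graph": 0.10},
    "none":       {"menu_surfing": 0.1, "word:graph": 0.05},
}


def posterior(prior: Dict[str, float],
              likelihood: Dict[str, Dict[str, float]],
              observations: Iterable[str]) -> Dict[str, float]:
    """P(need | observations) under a conditional-independence assumption.
    Only observations that are present are used; absent ones are ignored,
    which is a simplification."""
    obs = list(observations)
    unnormalized = {}
    for need, p in prior.items():
        for o in obs:
            p *= likelihood[need][o]
        unnormalized[need] = p
    total = sum(unnormalized.values())
    return {need: p / total for need, p in unnormalized.items()}


# Before the user types a query: only the modeled event is observed.
from_actions = posterior(PRIOR, LIKELIHOOD, ["menu_surfing"])

# After the query arrives: fold in the word evidence as well.
with_query = posterior(PRIOR, LIKELIHOOD, ["menu_surfing", "word:graph"])

print(max(from_actions, key=from_actions.get))
print(max(with_query, key=with_query.get))
```

With these invented numbers, the action evidence alone slightly favors a formatting need, and adding the query word shifts most of the probability mass to charting.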

Although most of our effort was focused on building an intelligent query service for interpreting natural queries, including the leveraging of dynamically sensed context about user activity and user competencies, we have also been interested in the question of deliberating about when to step forward to assist a user. We believe strongly that such intrusions should be done in a careful and conservative manner, with the express approval of users. We built versions of the Lumiere prototype that employ Bayesian models to control such "speculative assistance" actions, coupled with user-interface designs that showed promise for minimizing disruption and for putting the user in control of interruptions. To put the user in control, we designed a speculative assistance display that included a prominently featured "volume control" for interruptions; the volume control would always be available whenever such advice appeared. The volume control allowed users to move a slider in a "bother me less" or "bother me more" direction, changing the threshold at which active assistance would be provided. Thus, users could wrap the help system around their own preferences for the value of assistance. We also focused on the design of nonintrusive displays for speculative assistance actions. For example, we examined the use of small windows that would not steal system focus, and that would time out with a gentle apology if users did not hover over or interact with the recommendations. We also designed a "background assistance tracking" feature that would simply watch in the background as a user worked. An analysis, including such compilations as a custom-tailored set of readings, would be made available for review or printing when the user requested such an overall critique or context-sensitive assistance manual.

We show here how a small assistance window appears without stealing system focus. The speculative window containing the inferred best assistance appears after the inferred "likelihood that the user will desire assistance" exceeds a user-set threshold. The window times out with a brief apology ("Sorry for the Bother..." appears in the window title screen before the window disappears) if the user does not hover or interact with the assistance in any other way.
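The threshold behavior described above can be sketched as follows. This is an illustrative toy, not the prototype's code: the `VolumeControl` class, the default threshold, the step size, and the timeout value are all assumptions introduced for the example.

```python
from dataclasses import dataclass


@dataclass
class VolumeControl:
    """User-adjustable threshold on the inferred probability that the
    user would welcome assistance. Default and step are hypothetical."""
    threshold: float = 0.7
    step: float = 0.1

    def bother_me_less(self) -> None:
        # Raise the bar: assistance appears only at higher probabilities.
        self.threshold = round(min(1.0, self.threshold + self.step), 2)

    def bother_me_more(self) -> None:
        # Lower the bar: assistance appears more readily.
        self.threshold = round(max(0.0, self.threshold - self.step), 2)


def should_offer(p_wants_help: float, control: VolumeControl) -> bool:
    """Show the speculative window only when the probability exceeds
    the user-set threshold."""
    return p_wants_help > control.threshold


def window_action(seconds_shown: float, user_interacted: bool,
                  timeout: float = 8.0) -> str:
    """Decide what the nonmodal window does next; timeout is an assumed value."""
    if user_interacted:
        return "keep_open"
    if seconds_shown >= timeout:
        return "apologize_and_close"
    return "wait"


control = VolumeControl()
before = should_offer(0.65, control)   # 0.65 does not exceed 0.7
control.bother_me_more()               # threshold drops to 0.6
after = should_offer(0.65, control)    # 0.65 exceeds 0.6
print(before, after, window_action(10.0, user_interacted=False))
```

Keeping the threshold in a small object separate from the inference makes the slider a pure preference setting: moving it changes when assistance is shown without touching the model that computes the probability.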

During the course of Lumiere research, we have considered alternative user-interface metaphors for acquiring information from users and for sharing the results of Bayesian inference with users. Beyond experimenting with embedded actions and traditional windowing and dialog boxes, we have been interested in character-based interfaces as a way to provide a natural way to centralize assistance services. We built some animations in the early days of Lumiere to demonstrate basic interactions with a Bayesian-minded character (you can view some frames from an early demonstration animation...).

The Office Assistant in the Office '97 and Office 2003 product suites was based in spirit on Lumiere and on prior research efforts that had led to the Answer Wizard help retrieval system in Office '95. Office committed to a character-based assistant. Users were able to choose one of several assistants, each of which had a variety of behavioral patterns. All of the assistants draw their search intelligence, that is, their ability to interpret context and natural language queries, from Bayesian user models. This component of the Office Assistant system is based on our research, but it is only available when the user engages the system on their own.

The Office team has employed a relatively simple rule-based system on top of the Bayesian query analysis system to bring the agent to the foreground with a variety of tips. Upon hearing this plan, we had been concerned that this system would be distracting to users. We hoped that future versions of the Office Assistant would employ our Bayesian approach to guiding speculative assistance actions, coupled with the designs we had demonstrated for nonmodal windows that do not require dismissal when they are not used.

Email: horvitz@microsoft.com
